https://nmn.gl/blog/vibe-coding-fantasy

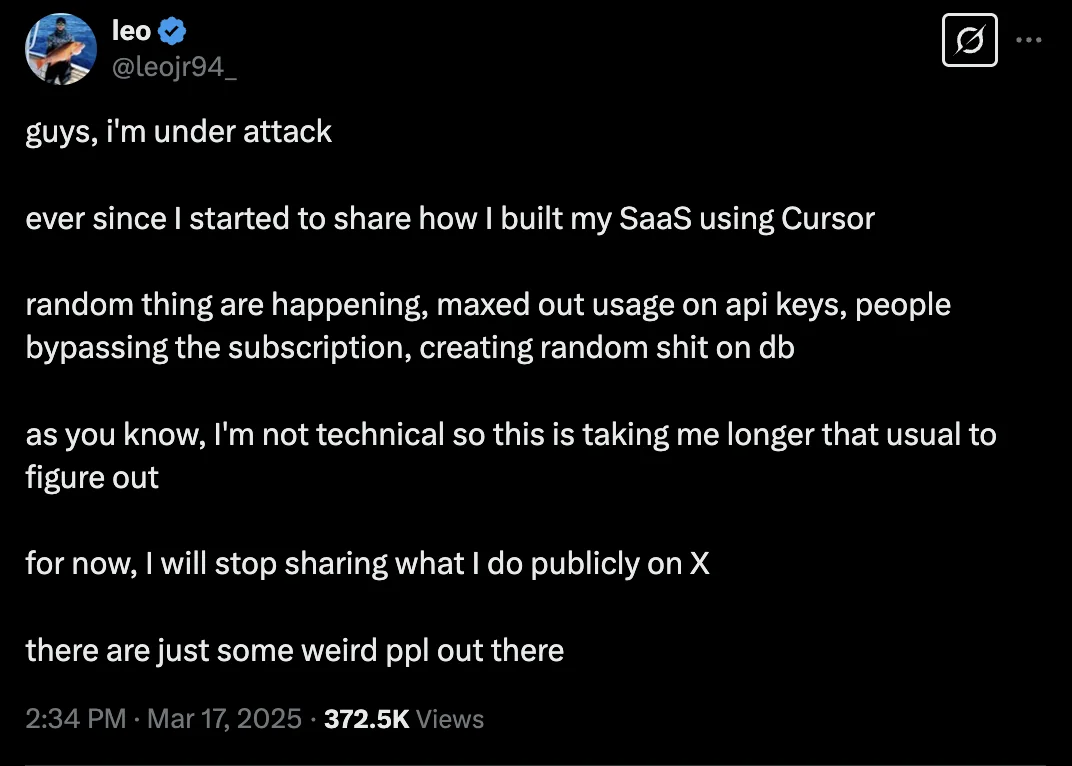

Last week, X exploded when a “vibe coder” announced his SaaS was under attack.

His business, built entirely with AI assistance and “zero hand-written code,” was experiencing bypassed subscriptions, maxed-out API keys, and database corruption.

What made this notable was his follow-up admission: “as you know, I’m not technical so this is taking me longer than usual to figure out.”

As someone deeply immersed in the AI code generation space, I’ve been watching this unfold with a mix of sympathy and frustration. Let me be clear — I’m not against AI-assisted development. My own tool aims to improve code generation quality. But there’s a growing and dangerous fantasy that technical knowledge is optional in the new AI-powered world.

After observing many similar (though less public) security disasters, I’ve come to a controversial conclusion: vibe coding isn’t just inefficient — it’s potentially catastrophic.

Recently, I was working late on a particularly thorny code generation problem when a message from an old SF friend popped up: “Dude, have you seen this? I just launched my side project without writing a single line of code. Just vibe coding!”

He shared his screen with me: a surprisingly polished-looking SaaS product that helped small businesses with their career paths. The UI was clean and the features worked. All built by telling Windsurf what he wanted, occasionally getting frustrated, refining his prompts, and never once understanding the underlying technology.

“That’s great,” I said, genuinely impressed. “What security measures did you implement?”

His blank stare told me everything.

Some time later, his API keys were scraped from client-side code that the AI had carelessly left exposed. He had to negotiate with OpenAI to get his bill forgiven.
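To make the failure concrete, here is a minimal sketch, in Python, of both sides of the problem. It is not what my friend's app actually contained; the names are hypothetical. `find_exposed_keys` shows how little effort it takes to pull a secret out of shipped client code, assuming OpenAI-style keys that begin with `sk-` (a heuristic, not a full secret scanner). `build_upstream_request` shows the usual fix: the browser talks only to your own backend, and the backend reads the key from its environment (the `OPENAI_API_KEY` variable name and the request shape are assumptions) and attaches it when forwarding, so the key never reaches the client.

```python
import os
import re

# Assumption: OpenAI-style secret keys start with "sk-" (e.g. "sk-proj-...").
# This regex is an illustrative heuristic, not a complete secret scanner.
KEY_PATTERN = re.compile(r"sk-[A-Za-z0-9_-]{20,}")


def find_exposed_keys(bundle_source: str) -> list[str]:
    """Return every substring of shipped client code that looks like a secret key.

    Anyone can run a check like this against a public JavaScript bundle,
    which is why a key embedded in client-side code should be treated as leaked.
    """
    return KEY_PATTERN.findall(bundle_source)


def build_upstream_request(user_prompt: str) -> dict:
    """Server-side only: attach the key when forwarding a request to the AI provider.

    The browser sends only `user_prompt` to your backend (hypothetical route such
    as /api/chat). The key is read from the server's environment here and is never
    included in any response sent back to the client. The dict shape is a
    hypothetical placeholder, not a specific provider's exact API.
    """
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set on the server")
    return {
        "headers": {"Authorization": f"Bearer {key}"},
        "json": {"input": user_prompt},
    }


if __name__ == "__main__":
    # A bundle that hard-codes the key: the scanner finds it.
    leaky_bundle = 'const client = new OpenAI({ apiKey: "sk-' + "a" * 32 + '" });'
    print(len(find_exposed_keys(leaky_bundle)))  # prints 1

    # A bundle that only calls your own backend: nothing to scrape.
    safe_bundle = 'fetch("/api/chat", { method: "POST", body: prompt });'
    print(len(find_exposed_keys(safe_bundle)))  # prints 0
```

A real backend would also authenticate the caller and rate-limit each user before forwarding, since an open proxy endpoint can be abused almost as easily as a leaked key.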

Today, his “side project” is offline while he’s trying to learn authentication and security from first principles.

The vibe coder’s dream turns into a nightmare not when the code doesn’t work, but when it works just well enough to be dangerous.

The problem isn’t that AI tools can’t generate secure code — they often can, with the right prompts. The problem is that without understanding what you’re building, you don’t know what you don’t know.

I witnessed this firsthand when helping another friend debug his AI-generated SaaS for teachers. Looking through the code, I discovered: